In the world of instructional design, people often toss around the words "assessment" and "evaluation" like they're the same thing. They're not. Getting the distinction right is key to creating training that actually works, especially when leveraging modern tools and theories.

Here’s a simple way to think about it: an assessment is like a coach giving you tips during practice. It’s all about improving your game as you go. An evaluation, on the other hand, is the final score of the championship game. It tells you how well you performed overall.

To build a great learning experience, you have to know if your learners are actually learning and if your program is doing its job. This is where assessments and evaluations come in. Even though they’re a team, they have very different roles. One guides the journey, while the other judges the destination.

This isn't just a matter of wording; it completely changes how you design, deliver, and improve your training. The demand for solid proof of learning is exploding. According to one market estimate, the assessment services market, valued at around USD 2.63 billion, is projected to more than double to roughly USD 5.31 billion by 2032. That points to one clear trend: businesses are relying more and more on good data to measure learning outcomes.

Assessments are the little check-ins that happen during the learning process. Their whole purpose is to give instant, helpful feedback to both the learner and the instructor. Think of them as diagnostic tools that pinpoint knowledge gaps and weak spots right away.

Assessments are like the GPS in your car. They give you constant feedback—"turn left in 500 feet"—to make sure you stay on the right path to your final destination. They are formative, meaning they shape the learning as it unfolds.

This ongoing feedback loop makes learning personal and adaptive. For instructional designers working with tools like the Articulate Suite or Adobe Captivate, this looks like sprinkling quick quizzes, interactive scenarios, and knowledge checks throughout a course. These small check-ins help learners fix mistakes before they become bad habits. To see this in action, you can explore our guide on formative evaluation and learn more about measuring progress along the way.

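To make this crystal clear, here’s a quick table breaking down the key differences.

| Aspect | Assessment | Evaluation |
|---|---|---|
| Timing | During learning | After learning |
| Purpose | Improvement: guide the learner as they go | Judgment: measure overall performance |
| Focus | The process of learning (the "how") | The final outcome (the "what") |
| Feedback | Formative, frequent, and immediate | A final verdict on results |
| Analogy | A coach's tips during practice | The final score of the championship game |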

As you can see, one is about the "how" (the process of learning), and the other is about the "what" (the final outcome). Both are essential for a complete learning strategy.

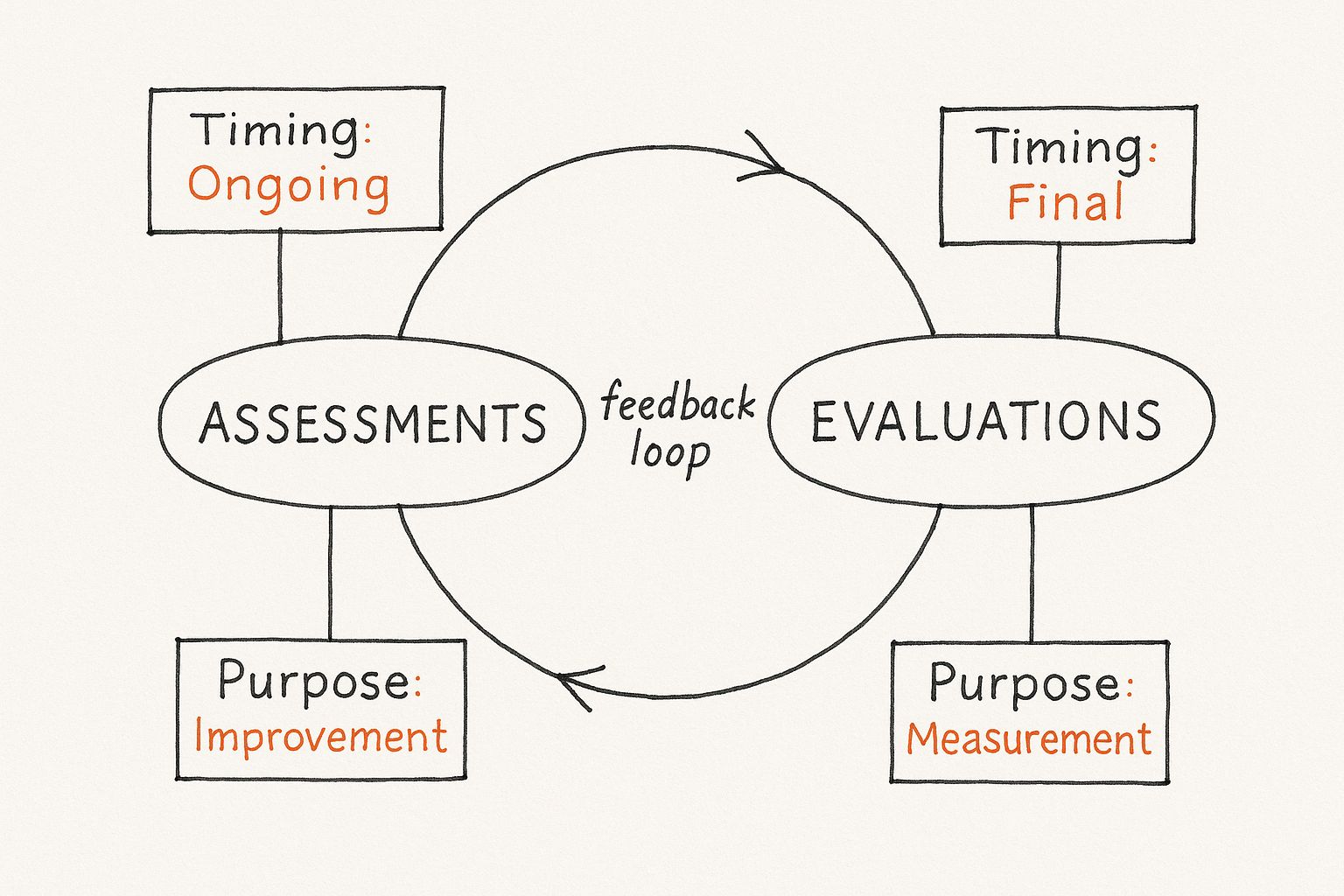

This infographic visualizes those same core differences: assessments are ongoing tools for improvement, while evaluations are the final measure of what someone has learned. Together, they create a powerful feedback loop.

Okay, so we've nailed down the difference between assessments and evaluations. Now for the fun part: picking the right tool for what you're trying to teach. Let's be honest, we can do a lot better than the standard multiple-choice quiz if we want to measure skills that people will actually use in the real world.

Think about it like a carpenter's toolbox. You wouldn't use a sledgehammer to hang a tiny picture frame, right? In the same way, the assessment you pick has to fit the learning goal you're aiming for. Broadly speaking, you've got two main categories to work with: formative and summative assessments.

Formative assessments are all about checking in during the learning process. They’re the low-stakes, frequent pop quizzes and activities designed to give immediate feedback. The point isn’t to slap a grade on someone; it’s to guide them.

For instructional designers, these are secret weapons, especially when paired with modern approaches like microlearning.

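Here’s what these look like in practice:

- Quick quizzes and knowledge checks sprinkled throughout a course
- Interactive scenarios that let learners practice a decision
- Drag-and-drop exercises
- Single-question polls at the end of a short module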

These quick, ongoing checks are a game-changer. They turn learning from a one-way street into an active conversation, showing learners exactly where they stand and what they might need to review.

This screenshot from Articulate Rise 360 shows just how many engaging, modern lesson types you can build, from interactive scenarios to simple knowledge checks.

The interface makes it incredibly easy for designers to weave these formative checks right into the course, keeping the whole experience dynamic and engaging.

If formative assessments are the helpful guide along the path, summative assessments are the final destination. They measure the big picture—whether a learner has truly grasped the overall objectives of a course. You’ll find these at the end of a unit, a module, or an entire program.

Think of summative assessments as the final "verdict" on a learner's mastery of the material. They're less about providing on-the-spot feedback and more about validating competence for things like certification. Naturally, they carry more weight.

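Here are a few common examples:

- Final exams at the end of a unit, module, or program
- Certification tests that validate competence
- Role-playing scenarios, such as pitching a mock client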

The key is matching the assessment to the goal. If you just want a salesperson to memorize a new product's features, a series of formative quizzes will do the trick. But if you want to know if they can actually sell that product? A summative role-playing scenario where they pitch a mock client is a far better test of their true ability.

By mixing and matching both formative and summative approaches, you create a powerful learning experience that both supports and validates learning from start to finish.

Effective assessment and evaluation no longer happen with paper and clipboards. The entire game has changed thanks to a stack of smart tools that give us a much deeper, more accurate picture of what people actually know and can do.

This tech isn't just about speed; it’s about making measurement smarter, more interactive, and genuinely insightful. Think of it as the central nervous system for your whole training program—it connects your content, your learners, and your data into one seamless loop. Without it, even the most brilliant assessment design would fall flat.

At the core of almost every corporate training setup, you'll find either a Learning Management System (LMS) or a Learning Experience Platform (LXP). The LMS is the traditional workhorse. It's the central hub where you host, deliver, and track all your training content, keeping a record of who completed what, their quiz scores, and whether they’re up-to-date on compliance.

The LXP is the newer kid on the block, built around the learner's experience. It’s more like a "Netflix for learning," using AI to serve up recommended content from all over the place based on a person’s role, skills gaps, and interests. While they work differently, both are the launchpads for your assessments and the primary collectors of the raw data you need for any real evaluation.

A well-implemented LMS or LXP is your command center for learning measurement. It’s where you not only launch assessments but also collect the crucial data that tells you what’s working and what isn’t.

To make sure all that data gets captured correctly, these platforms have to speak the same language. That’s where technical standards come in. We put together a guide explaining what a SCORM package is to demystify how these different tools talk to each other.

So, your LMS delivers the training, but what about building the actual assessments? That’s where authoring tools like the Articulate Suite and Adobe Captivate shine. These are the creative sandboxes where instructional designers build everything from quick knowledge checks to complex, branching scenarios.

They let you go way beyond boring multiple-choice questions.

These are the tools that bring your formative and summative assessments to life, giving you the freedom to design measurement that actually reflects the skills you care about.

The most exciting thing happening in assessments and evaluations right now is easily the rise of Artificial Intelligence (AI). AI is quickly moving past simple automation and is starting to add a real layer of intelligence to the whole process. It’s making measurement more personal, efficient, and packed with insights we couldn’t get before.

AI is a big part of why the assessment services market keeps growing. Estimates vary with how each report defines the market; one recent market analysis projects growth from USD 11.94 billion to USD 26.56 billion by 2032. As AI gets smarter, it's being used to make sense of huge datasets, automate scoring, and give learners highly specific feedback.

Here’s where AI is already making a huge difference:

Pulling all these pieces together is what creates a modern, effective measurement system. Each technology plays a distinct but crucial role in moving beyond simple pass/fail scores to truly understanding and improving learner performance.

This combination of a solid delivery platform, powerful creative tools, and intelligent AI is the foundation of modern learning measurement. It’s how you build a system that doesn’t just test knowledge, but actively improves the entire learning experience.

Let's be real—the moment you say "test," you can almost feel the collective groan. Traditional, stuffy quizzes have done more to make people tune out than lean in. But it doesn't have to be that way. We can flip that script. Modern assessments and evaluations can actually be a motivating, engaging part of the whole learning experience.

The trick is to make them feel less like an interrogation and more like a game or a puzzle to be solved. It’s all about shifting the focus from "Did you memorize this?" to "How would you solve this?" That simple change is what turns a forgettable quiz into a genuinely powerful learning moment.

The single biggest pitfall I see is questions that just test rote memorization. People forget isolated facts almost instantly, but they remember how to solve a problem they've worked through. That’s why scenario-based questions are your best friend.

So, instead of a dry question like, "What are the three features of Product X?" frame it in a real-world context: "A customer is worried about security and asks how Product X protects their data. What are the three key features you would highlight to reassure them?" This tiny tweak forces the learner to apply their knowledge in a practical way, making the whole thing feel far more relevant.

The goal of a great assessment isn't to catch learners out—it's to build their confidence. When you test their ability to apply what they've learned, you're empowering them to walk away and actually use their new skills.

This approach doesn't just give you a better read on their understanding; it actively reinforces the training by tying it directly to tasks they'll face on the job. Once learners see that connection, their motivation shoots way up.

Nothing kills engagement faster than a wall of text. Thankfully, modern authoring tools like the Articulate Suite and Adobe Captivate make it a breeze to weave in rich media. This isn't just about making things look pretty; it's about creating a more immersive and memorable experience.

Here are a few simple but effective ideas:

Little touches like these add variety for learners with different preferences and make the assessment feel more like an interactive experience and less like a static exam.

One of the most common missed opportunities is lazy feedback. A simple "Incorrect" is a dead end. It doesn't help anyone learn. Truly meaningful feedback is what makes assessments and evaluations so powerful—it’s what closes the learning loop.

You have to explain the why. If someone gets a question wrong, the feedback needs to clarify the right answer and the logic behind it. For instance, instead of just "The correct answer is B," try something like: "The correct answer is B. While A is a good first step, B is the most critical because it directly addresses the customer's main concern."

Even better, give feedback for correct answers, too. Reinforcing why they were right helps lock in the knowledge and builds their confidence. Some modern LMS and LXP platforms now use AI to deliver personalized feedback: they infer a learner's specific misunderstanding from the wrong answer they chose and give a targeted explanation. This turns a simple quiz into a personal coaching session.
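To make option-level feedback concrete, here is a minimal Python sketch. It assumes a simple, hypothetical question format: the `Question` class, its fields, and the sample option texts are illustrative and are not the API of Articulate, Captivate, or any LMS. It uses a fixed mapping from each answer choice to an explanation rather than AI, but it follows the same principle: every answer, right or wrong, comes with a reason.

```python
from dataclasses import dataclass


@dataclass
class Question:
    """Hypothetical multiple-choice item that stores feedback for every option."""

    prompt: str
    correct: str
    # Maps each option letter to an explanation of why it is right or wrong.
    feedback: dict[str, str]

    def respond(self, choice: str) -> str:
        """Return targeted feedback instead of a bare 'Incorrect'."""
        if choice == self.correct:
            verdict = "Correct!"
        else:
            verdict = f"The correct answer is {self.correct}."
        # Fall back to a generic hint if an option has no explanation yet.
        why = self.feedback.get(choice, "Review this section and try again.")
        return f"{verdict} {why}"


# Illustrative item; the wording of options B and C is made up for this sketch.
q = Question(
    prompt="A customer is worried about data security. Which step do you take first?",
    correct="B",
    feedback={
        "A": "While A is a good first step, B is the most critical because "
             "it directly addresses the customer's main concern.",
        "B": "B directly addresses the customer's main concern, security.",
        "C": "C may help later, but it doesn't speak to the customer's worry.",
    },
)

print(q.respond("A"))
print(q.respond("B"))
```

The design choice worth copying is that each option carries its own explanation, with a generic fallback so no learner ever hits a dead-end "Incorrect."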

Collecting data from your assessments and evaluations is actually the easy part. The real work begins when you have to turn that mountain of raw numbers into smart, practical decisions that make your training programs better. Without a plan, all that information just gathers digital dust.

Think of it like this: your data is a pile of ingredients on a kitchen counter. They have potential, but they won't become a meal until you actually start cooking. The goal is to move from just having data to truly understanding what it’s telling you about your learners and your course content.

Your first step is to start looking for patterns. Don't just glance at the overall completion rate and move on. You have to dig a bit deeper to uncover the real story hiding in the data.

For example, a high completion rate looks great on the surface. But what if the question-level data shows that 80% of learners are getting the exact same assessment question wrong? That's probably not a learner problem; it's a content problem (or a poorly written question). It's a huge red flag that a specific section of your course is confusing or just isn't landing. Time for a rewrite.
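Spotting that kind of pattern is straightforward once you have question-level results. The Python sketch below assumes a hypothetical export of `(learner_id, question_id, answered_correctly)` rows. Real LMS exports differ in format, and the sample data and the 50% threshold are made up for illustration. It computes the share of learners who missed each question and flags the worst offenders for review.

```python
from collections import defaultdict

# Hypothetical export format: (learner_id, question_id, answered_correctly)
responses = [
    ("ana", "Q1", True), ("ana", "Q2", False), ("ana", "Q3", True),
    ("ben", "Q1", True), ("ben", "Q2", False), ("ben", "Q3", False),
    ("cho", "Q1", False), ("cho", "Q2", False), ("cho", "Q3", True),
    ("dev", "Q1", True), ("dev", "Q2", False), ("dev", "Q3", True),
    ("eli", "Q1", True), ("eli", "Q2", True), ("eli", "Q3", True),
]


def error_rates(rows):
    """Return the share of attempts that missed each question."""
    attempts = defaultdict(int)
    misses = defaultdict(int)
    for _learner, question, correct in rows:
        attempts[question] += 1
        if not correct:
            misses[question] += 1
    return {qid: misses[qid] / attempts[qid] for qid in attempts}


def flag_questions(rates, threshold=0.5):
    """List questions missed at or above `threshold`, worst first."""
    flagged = [qid for qid, rate in rates.items() if rate >= threshold]
    return sorted(flagged, key=lambda qid: rates[qid], reverse=True)


rates = error_rates(responses)
for question in flag_questions(rates):
    print(f"{question}: {rates[question]:.0%} missed; review the content behind it")
```

With this sample data, only Q2 is flagged, because four of five learners missed it. You can lower the threshold to cast a wider net. Keep in mind that a flagged question can point to confusing content, an ambiguous question, or even a wrong answer key, so check all three.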

This focus on data-driven improvement is part of a much bigger picture. The global assessment services market has been growing for years, tied closely to workforce development. With the recent explosion in remote work, the demand for tech-based solutions has skyrocketed, pushing the market even further as companies need more precise ways to measure what their people know. You can discover more insights about the assessment services market on Statista.com.

To make sense of it all, you need a simple framework for turning your findings into concrete steps. It's also wise to follow established data analysis best practices so the conclusions you draw are reliable.

Here’s a straightforward, three-step process to get you started:

Evaluation data isn’t a final grade for your course; it's a diagnostic tool. It shows you exactly where to operate to make your training healthier, stronger, and more effective for every learner.

This approach changes everything. Evaluation stops being a final judgment and becomes an ongoing conversation. You're constantly listening to what the data is telling you and using those insights to refine and improve. This process is also fantastic for uncovering critical knowledge gaps across your entire organization. If you want to dive deeper, check out our guide on how to perform a skills gap analysis and use our helpful template.

Ultimately, this is how you prove the value of your programs. When you can walk up to stakeholders and show them that you used evaluation insights to increase course completion by 25% or boost post-training assessment scores by 40%, you're no longer just talking about learning. You're showing measurable impact that builds a real return-on-investment case.

Here’s where great learning design really comes to life: stop thinking of assessments and evaluations as separate, one-and-done tasks. Instead, imagine them as two connected gears in a powerful engine, working together to drive your training forward.

This isn’t about just checking a box at the end of a course. It’s about creating a constant feedback loop. Individual assessments give learners the real-time feedback they need to understand where they are and what to do next. Then, when you pull all that data together, the evaluation tells you what’s hitting the mark and what needs a serious rethink.

Think of it like an ongoing conversation. The assessments are the learner's voice, showing you exactly where they’re succeeding and where they’re getting stuck. The evaluation is your response, letting you fine-tune the content and your entire approach based on what you heard.

This back-and-forth ensures your whole training program gets smarter and more effective over time.

Of course, you can't improve what you don't measure. A great starting point is to figure out what your team actually needs. Using a resource like a training needs assessment template helps you get a clear picture from the get-go, making sure your efforts are focused where they'll have the biggest impact.

This is how you turn learning from a static information dump into a living, breathing experience that responds to people's needs.

Assessments and evaluations aren't the finish line; they are the engine of progress. When you embrace this cycle, you build learning that doesn't just inform—it adapts and evolves.

Ultimately, this commitment to a continuous loop is what separates the good instructional designers from the great ones. It’s about building something that’s not just informative but genuinely helps people grow. By listening to the story your data is telling and making thoughtful changes, you create a learning environment that empowers everyone.

Even after you get the hang of assessments and evaluations, a few questions always seem to pop up. Let's tackle some of the most common ones so you can move forward with confidence.

What's the main difference between assessment and evaluation? If you remember just one thing, make it this: think about when and why.

Assessment is for improvement while the learning is still happening. It's like a coach giving you pointers during practice. On the other hand, evaluation is for judgment after the learning is over—it's the final score of the game. One guides, the other grades.

Can smaller teams use AI in their assessments and evaluations? Good news: you don't need a massive, custom-built AI system anymore. Many modern authoring tools and LMS platforms now have powerful, user-friendly AI features built right in, which makes them a good fit for smaller teams.

For instance, you can use these built-in tools to:

Tools like the Articulate Suite or Adobe Captivate, especially when used with a modern LXP, put this kind of tech within reach for any team. These features aren't just for the big guys anymore.

Do assessments work with microlearning? They absolutely do. In fact, microlearning and formative assessments are a match made in heaven. The whole point of microlearning is to deliver learning in small, digestible chunks, which makes it ideal for quick, low-stakes knowledge checks that reinforce ideas without bogging anyone down.

Think about it—every short module can end with a simple scenario, a drag-and-drop exercise, or even a single-question poll. The learner gets immediate reinforcement, and you get valuable data on what’s sticking. It’s a win-win.

Which is better, formative or summative assessment? It's a classic question, but it sets up a false choice. It's not about picking one over the other; a solid learning strategy needs both. They play different, but equally critical, roles.

Imagine it like this: Formative assessments are the rehearsals that build skills and confidence. The summative assessment is the big opening night performance that shows off what you've mastered. You can't have a great show without the rehearsals.

The best programs weave in a series of formative check-ins to guide learners and prepare them for the final boss: the summative assessment. By the time it arrives, they're ready to nail it. A healthy mix is always the way to go.

Ready to create learning that doesn't just engage, but actually works? Relevant Training helps small and medium-sized businesses develop and update eLearning content that delivers real results. Let's build a smarter training strategy together.